Coral NPU is a machine learning (ML) accelerator core designed for energy-efficient AI at the edge. Based on the open hardware RISC-V ISA, it is available as validated open-source IP for commercial silicon integration.

Coral NPU's open-source strategy aims to create a standard architecture that accelerates the edge AI ecosystem. It builds on Coral.ai, a prior Google Research effort. First released in 2023 as a component of the "Open Se Cura" research project, it is now a dedicated initiative to drive this vision forward.

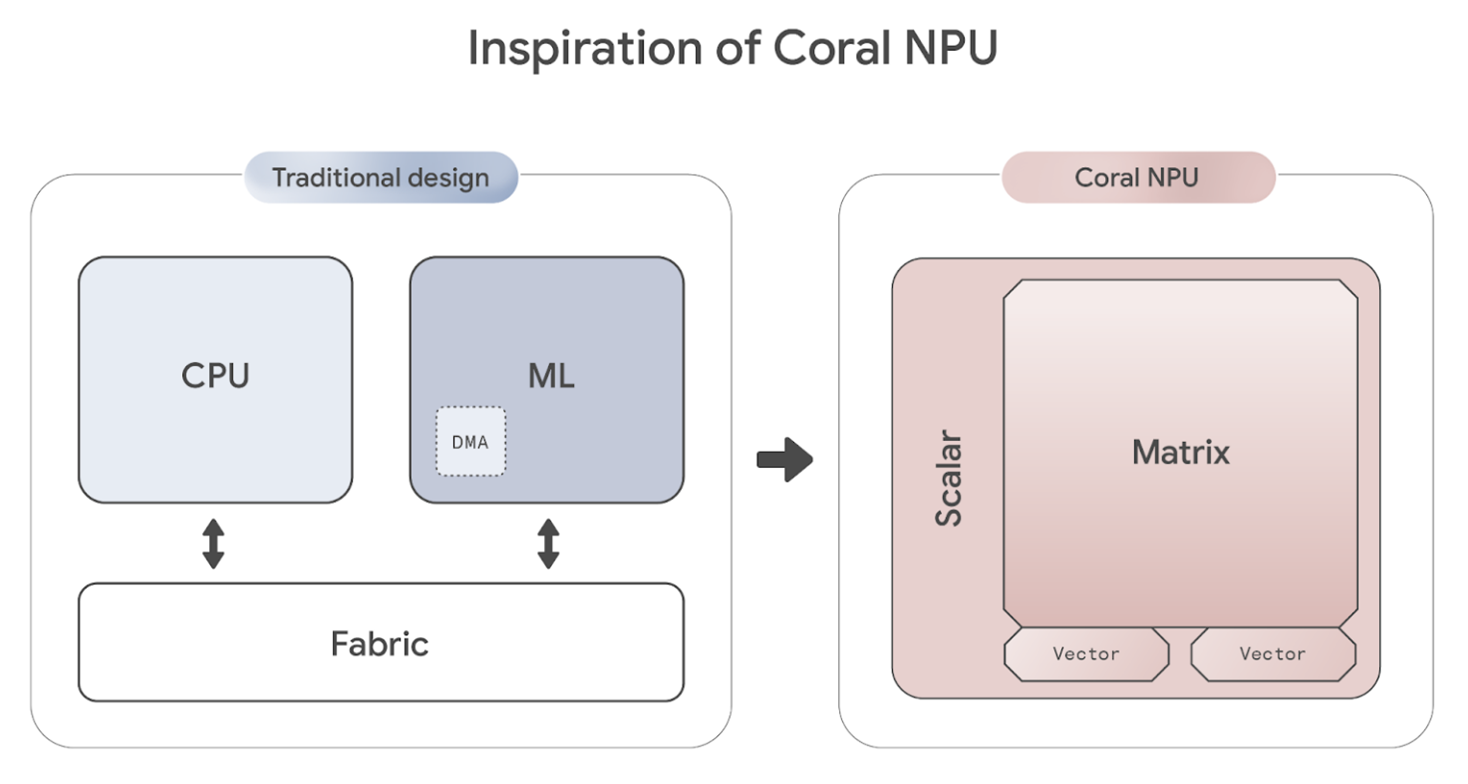

Coral NPU directly addresses the significant fragmentation in the edge AI device ecosystem. Developers currently face a steep learning curve and major programming complexity because programming models differ between separate general-purpose (CPU) and ML compute blocks. These ML blocks often rely on command buffers generated by specialized, proprietary compilers. This fragmented approach makes it difficult to combine the strengths of each compute unit and forces developers to manage multiple proprietary and opaque toolchains for bespoke architectures.

Coral NPU is built on the RISC-V ISA standard, extending the C programming environment with native tensor processing capabilities. It supports multiple machine learning frameworks, including JAX, PyTorch, and TensorFlow Lite (TFLite), using open-standards-based tools such as Multi-Level Intermediate Representation (MLIR) from the Low Level Virtual Machine (LLVM) project for compiler infrastructure.

This integration of native ML acceleration primitives with a general-purpose computing ISA delivers high ML performance without the usual system complexity, cost, and data movement associated with separate, proprietary CPU/NPU designs.

Coral NPU's design is driven by several key principles:

ML-First Architecture: Coral NPU reverses the traditional processor design. Instead of starting with basic scalar computing, then adding vector (SIMD) and finally matrix capabilities, Coral NPU is built with matrix (ML) capabilities first, then integrates vector and scalar functions. This tight integration of scalar, vector, and matrix capabilities in a single ISA optimizes the entire architecture for AI workloads from its foundation. See the Architecture overview for more details.

Dedicated ML Engine: At the center of the design is a quantized outer product multiply-accumulate (MAC) engine, purpose-built for the fundamental calculations of neural networks. This specialized core processes 8-bit operations into 32-bit results with extreme efficiency.

Integrated Vector (SIMD) Core: The vector co-processor implements the RISC-V Vector Instruction Set (RVV) v1.0, using a 32 x 256 bit vector register file and a "strip-mining" mechanism where a single instruction triggers multiple operations, significantly boosting efficiency.

Simple, C-Programmable Scalar Core: A lightweight RISC-V (RV32IM) frontend acts as a simple controller, managing and feeding the powerful Matrix and Vector backend. This core is designed for a "run-to-completion" model, meaning it doesn't require a complex operating system or frequent interrupts, contributing to its ultra-low power consumption.

Efficient Memory Management: Coral NPU uses a single layer of small, fast cache (8KB for instructions, 16KB for data) to keep data close to the processing units, minimizing power and latency.

Unified Developer Experience: The platform is C-programmable and designed for easy integration with modern ML compilers like TensorFlow Lite Micro (TFLM) and IREE. This allows a unified, MLIR-based toolchain to support models from major frameworks like TensorFlow, JAX, and PyTorch.
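The strip-mining idea mentioned for the vector core can be illustrated with a small software model. This is only a sketch of the general loop pattern, assuming the 256-bit register width given above; the function name `strip_mined_add` and the instruction counter are hypothetical and do not describe the actual hardware mechanism or any Coral NPU API.

```python
VLEN_BITS = 256  # vector register width stated in the text


def strip_mined_add(a, b, elem_bits=8):
    """Add two equal-length integer lists one register-sized strip at a time.

    Returns the element-wise sums and the number of modeled vector
    operations issued. Integer wraparound is not modeled.
    """
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    lanes = VLEN_BITS // elem_bits  # elements that fit in one register
    out = []
    issued = 0
    i = 0
    while i < len(a):
        vl = min(lanes, len(a) - i)  # the final strip may be shorter
        out.extend(x + y for x, y in zip(a[i:i + vl], b[i:i + vl]))
        issued += 1
        i += vl
    return out, issued


sums, issued = strip_mined_add(list(range(100)), [1] * 100, elem_bits=8)
print(issued)  # 4 strips: 32 + 32 + 32 + 4 elements
```

With 8-bit elements each strip covers 32 elements, so 100 additions collapse into four modeled vector operations; with 32-bit elements the same input would take 13.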

Coral NPU's design delivers a highly efficient balance of power and performance, making it ideal for ambient applications and scalable to multicore setups.

Representative numbers:

Performance: targeting 512 GOP/S (Giga Operations Per Second) with 256 MACs/cycle

Power Objective: targeting ~6mW at 800MHz, using 22nm

More specific power, performance, and area (PPA) metrics are made available by commercial silicon partners.
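The relationship between MACs per cycle and peak operations per second can be checked with simple arithmetic. The sketch below assumes the common convention that one MAC counts as two operations (a multiply and an add); the text does not state which convention or clock frequency the 512 GOP/S target uses, so `OPS_PER_MAC` and the helper `peak_gops` are illustrative assumptions.

```python
MACS_PER_CYCLE = 256  # from the text
OPS_PER_MAC = 2       # assumption: one multiply plus one add per MAC


def peak_gops(clock_hz, macs_per_cycle=MACS_PER_CYCLE, ops_per_mac=OPS_PER_MAC):
    """Theoretical peak throughput in giga-operations per second."""
    return macs_per_cycle * ops_per_mac * clock_hz / 1e9


print(peak_gops(1.0e9))  # 512.0
print(peak_gops(800e6))  # 409.6
```

Under this assumption, 256 MACs per cycle reaches 512 GOP/S at a 1 GHz clock, while the 800 MHz operating point quoted for the power objective would give a peak of roughly 410 GOP/S.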

Coral NPU is designed to enable ultra-low-power, always-on edge AI applications, with a particular focus on ambient sensing systems. Its primary goal is to enable all-day AI experiences on wearable devices while minimizing battery usage.

Potential Use Cases

Contextual Awareness: Detecting user activity (e.g., walking, running), proximity, or environment (e.g., indoors/outdoors, on-the-go) to enable "do-not-disturb" modes or other context-aware features.

Audio Processing: Voice and speech detection, keyword spotting, live translation, transcription, and audio-based accessibility features.

Image Processing: Person and object detection, facial recognition, gesture recognition, and low-power visual search.

User Interaction: Enabling control via hand gestures, audio cues, or other sensor-driven inputs.

Ideal Device Categories

Coral NPU's combination of high efficiency and low power makes it ideal for a wide range of hardware:

Hearables and smart earbuds

Smart glasses and AR headsets

Smartwatches and fitness trackers

Smart home and ambient IoT devices

Mobile phones (for ultra-low-power co-processing)

Automotive and in-vehicle systems

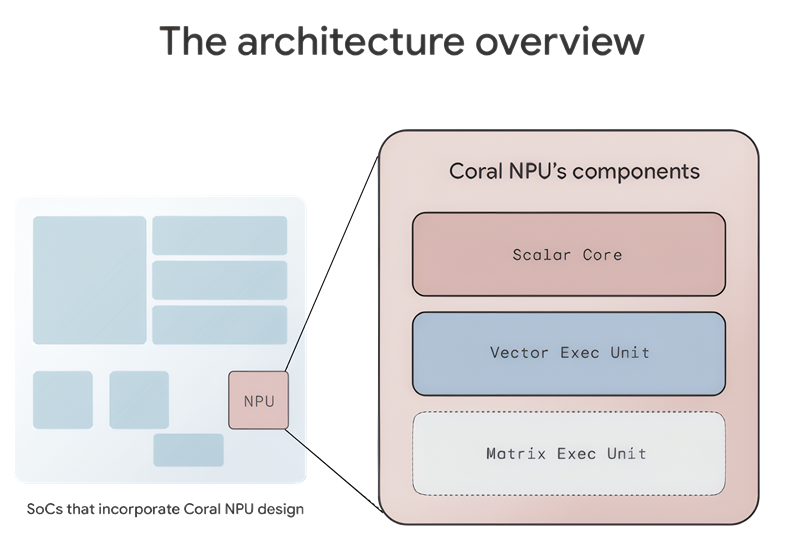

Coral NPU is a complete reference design for neural processing units (NPUs) based on the open RISC-V standard. It consists of three core components:

A scalar core for traditional CPU functions

A vector execution unit for additional computational features

A matrix execution unit for matrix multiply-accumulate operations

Together, these three components provide the complete feature set and performance required for a Coral NPU-based NPU. Any chip vendor designing a discrete NPU or integrating Coral NPU into a System-on-Chip (SoC) will need to incorporate all three components for a complete solution.

The main features and functions offered by each of the components are the following:

Scalar Core

Serves as the in-order, non-speculative frontend processor.

Drives the command queues for the vector and matrix execution units.

Fully compliant with the open RISC-V 32-bit base ISA standard (RV32I).

Features 31 general-purpose scalar registers, each 32 bits wide.

Offers a C-programmable interface for managing loops, control flow, flexible type encodings, and instruction compression for the SIMD/vector backend.

Vector Execution Unit

Performs a wide range of vector and machine learning (ML) computations, including array operations, ML activation functions, and reductions.

Based on a Single Instruction, Multiple Data (SIMD) design.

Decoupled from the scalar frontend by a FIFO command queue, which buffers vector instructions.

Equipped with 64 vector registers, each 256 bits wide (e.g., capable of holding eight 32-bit integers).

Natively supports 8-bit, 16-bit, and 32-bit data widths.
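To make the register geometry concrete, the sketch below packs values into the 32 bytes of one modeled 256-bit register at each of the three supported widths. It uses Python's standard `struct` module purely as an illustration; the `pack_register` helper, the signed interpretation, and the little-endian layout are assumptions and not part of any Coral NPU toolchain.

```python
import struct

VLEN_BYTES = 256 // 8  # one 256-bit vector register, per the text

# struct format codes for signed 8-, 16-, and 32-bit integers
FORMATS = {8: "b", 16: "h", 32: "i"}


def pack_register(values, elem_bits):
    """Pack integers into the bytes of one modeled vector register."""
    if elem_bits not in FORMATS:
        raise ValueError("supported element widths are 8, 16, and 32 bits")
    lanes = VLEN_BYTES * 8 // elem_bits
    if len(values) != lanes:
        raise ValueError(f"expected {lanes} elements of {elem_bits} bits")
    # "<" selects little-endian byte order with standard sizes
    return struct.pack(f"<{lanes}{FORMATS[elem_bits]}", *values)


for bits in (8, 16, 32):
    lanes = 256 // bits
    reg = pack_register(list(range(lanes)), bits)
    print(bits, lanes, len(reg))  # 32, 16, and 8 lanes; always 32 bytes
```

One register therefore holds thirty-two 8-bit, sixteen 16-bit, or eight 32-bit elements, which matches the eight 32-bit integers mentioned above.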

Matrix Execution Unit

Accelerates the multiply-accumulate (MAC) operations at the heart of ML workloads, such as matrix multiplication (matmul) and convolutions.

Features an outer-product engine capable of 256 multiply-accumulate (MAC) operations per cycle.

Status: currently under development and evaluation as part of the RISC-V matrix extension task group.
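An outer-product formulation of matrix multiplication can be sketched in a few lines. The example below is a plain software model, assuming 8-bit inputs accumulated into wider results as described for the ML engine; the function name `outer_product_matmul` is hypothetical, and the 16 x 16 tile mentioned afterwards is only one shape that would yield 256 MACs per step, since the text does not specify the tile geometry.

```python
def outer_product_matmul(a, b):
    """Compute C = A @ B as a sum of rank-1 outer products.

    a is an M x K list of lists and b is a K x N list of lists, both holding
    int8 values. Each of the K steps performs M * N multiply-accumulates.
    Accumulator overflow is not modeled.
    """
    m, k = len(a), len(b)
    n = len(b[0])
    if any(len(row) != k for row in a) or any(len(row) != n for row in b):
        raise ValueError("matrix shapes do not match")
    if any(not -128 <= v <= 127 for row in a + b for v in row):
        raise ValueError("inputs must fit in int8")
    acc = [[0] * n for _ in range(m)]  # stands in for 32-bit accumulators
    for step in range(k):
        col = [a[i][step] for i in range(m)]  # column `step` of A
        row = b[step]                         # row `step` of B
        for i in range(m):
            for j in range(n):
                acc[i][j] += col[i] * row[j]  # one MAC
    return acc


print(outer_product_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
# [[19, 22], [43, 50]]
```

Each outer-product step updates every accumulator once, so a hypothetical 16 x 16 accumulator tile would perform 256 MACs per step.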

Refer to the Architecture Basics page for more details of each of these features.

For more information about Coral NPU, see https://developers.google.com/coral.